Introduction

Hello everyone. In this post, we will dive into the string module of Pandas. We will learn that although we can achieve all the required task even without the module but using the module gives us the much-needed programming robustness and elegance.

We will learn,

- Pandas Category vs String

- Different operation with Pandas str module

- Performance comparison with a simple approach

Let's jump to the code

Understanding the String dtype

By default, the string data will be of the object type. We may explicitly define the dtype to string.

With an explicit definition in place, we can select all the string columns with select_dtypes

data_obj = pd.Series(["test"])

data_str = pd.Series(["test"], dtype='string') # Created with string dtype

data_obj.dtype, data_str.dtype

data_obj = pd.DataFrame(data = [{"A":"A1", "B":"B1"},{"A":"A2", "B":"B2"}])

data_obj.select_dtypes('object') # Output both the columns

data_obj.B = data_obj.B.astype('string')

data_obj.select_dtypes('string') # Output only column B

Code-explanation

All other parts of the code is quite trivial and self-explanatory.

The category type

If the string column is not a free text column or when the unique values are limited i.e. a nominal data type e.g. age, then using the category dtype has performance benefit.

Excerpt from the official document

If you have a Series where lots of elements are repeated (i.e. the number of unique elements in the Series is a lot smaller than the length of the Series), it can be faster to convert the original Series to one of type category and then use.str.<method> or .dt.<property>on that. The performance difference comes from the fact that, for Series of type category, the string operations are done on the .categories and not on each element of the Series.

Let's check a code,

dataset = pd.read_csv("/content/california-housing-prices.zip") #1

%timeit dataset.ocean_proximity.map(lambda x :x.replace('A', 'B')) #2

dataset.ocean_proximity = dataset.ocean_proximity.astype('category') #3

%timeit dataset.ocean_proximity.map(lambda x :x.replace('A', 'B')) #4

Code-explanation

#1 - We have loaded the famous California dataset which has a text column i.e.ocean_proximity

#2 - Got the average execution time on the text col for a simple operation

#3 -Changed the dtype ofocean_proximityto category

#4 -Got the new execution time for the same operation

Result

Output#1 -100 loops, best of 5: 5.75 ms per loop

Output#2 -1000 loops, best of 5: 374 µs per loop

We can see that the performance gain is almost x15 on 20K samples.

Let's check some frequent text operations

In this section, we will list the code for multiple very frequently used string operation. So you may keep these code snippet or function on your finger-tip

Calling regular string function with str attributes

To call the string functions on a Pandas column, we use the str attributes.

Let's call the upper, lower,len functions

data = pd.DataFrame(data = [{"A":"A1", "B":"B1"},{"A":"A2", "B":"B2"}])

# Lower

data.A.str.lower()

# Chaining of functions

data.A.str.lower().str.len()

# Strip

data.A.str.strip()

It has all the methods that are available for Python string. Here is the link for the List

Split function

We can use the split function in many ways. The most simple being to split on delimiter to create a DataFrame on the fly from a single column/series.

# Create a dummy data

data = pd.Series(["a_b_c", "c_d_e", "f_g_h"], dtype="string")

# Call the split function

data.str.split("_")

# Select the individual split with get or []

data.str.split("_").str.get(1)

data.str.split("_").str[1]

# You may create a DataFrame using the expand parameter

df = data.str.split("_", expand=True)

df.columns=['fname','mname','lname']

df

Code-explanation

All the parts of the code is quite trivial and self-explanatory but you must take a note of the last snippet. It can shorten multiple lines of code into just one line.

The replace function

replace is another most useful function. With str.replace, you can not only replace a simple string but also use regex to replace which makes it very powerful

# Replace

dataset = pd.read_csv("/content/california-housing-prices.zip")

# Simple replace

dataset.ocean_proximity.str.replace(" ", "_")

# Replace with regex - Mask the vowels

dataset.ocean_proximity.str.replace("[aeiou]", "X", regex=True, case=False) #1

Code-explanation

#1 - Setregex=Trueto use regex

#1 - Use thecaseflag to ignore case

All other parts of the code is quite trivial and self-explanatory.

Important excerpt from the official document

Warning: Some caution must be taken when dealing with regular expressions! The current behaviour is to treat single-character patterns as literal strings, even when regex is set to True. This behaviour is deprecated and will be removed in a future version so that the regex keyword is always respected.

Let's Slice the string

You can simply slice the column just the way we do on a simple string. We can do that either using the slice method or the [ ]

dataset = pd.read_csv("/content/california-housing-prices.zip")

# Remove the first and last char

dataset.ocean_proximity.str.slice(1,-1)

dataset.ocean_proximity.str[1:-1]

# Get a char

dataset.ocean_proximity.str[0]

Boolean method

The str attribute also contains all the necessary function which are required to get a boolean flag out of String e.g. if it is all Alphanumeric or contains other chars

# Startswith, Endswith

dataset.ocean_proximity.str.startswith('B')

dataset.ocean_proximity.str.startswith('Y')

# isalnum(), isalpha(), isspace(), islower(), isupper(), etc.

dataset.ocean_proximity.str.isalnum() # Check if all chars are Alphabet or Num

dataset.ocean_proximity.str.isalpha() # Check if all chars are Alphabet only

dataset.ocean_proximity.str.isspace() # Check if its space only

dataset.ocean_proximity.str.islower() # Check if all chars in Lower case

dataset.ocean_proximity.str.isupper() # Check if all chars in Upper case

String function on Index and Column

All these str functionalities are available on DataFrame index too. Columns are also an index.

It is very common to get a dataset with the columns name with spaces, mixed case. We can fix these issue in a simple one-liner

# Columns

!kaggle datasets download kumarajarshi/life-expectancy-who

dataset = pd.read_csv("/content/life-expectancy-who.zip")

# Remove left/right spaces, convert to lower case, Underscore instead of mid space

dataset.columns = dataset.columns.str.strip().str.lower().str.replace(" ", '_')

Code-explanation

All other parts of the code is quite trivial and self-explanatory.

Let's apply on the IMDB movie review dataset

Let's quikly build a DataFrame out of the raw IMDB movie dataset

# Data load i.e. Raw data

data = pd.read_csv("/content/review.csv")

data.review = data.review.astype('string')

data.sentiment = data.sentiment.astype('category')

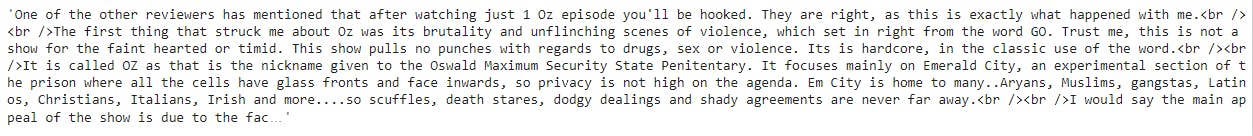

data.review[0]

Result

We will convert this text into a DataFrame with a columns for each word(tokens)

# Replace the "<br></br>> with blank

data.review = data.review.str.replace('<.*?>',' ', regex=True)

# Remove non-alphabets

data.review = data.review.str.replace('[^a-zA-Z]',' ', regex=True)

# Convert all to lower case

data.review = data.review.str.lower()

# Replace muliple blanks with single blanks

data.review = data.review.str.replace('[ ]+',' ', regex=True)

import itertools

flatten = itertools.chain.from_iterable

corpus = list(set(list(flatten(data.review.str.split(" ").tolist())))) #1

len(corpus)

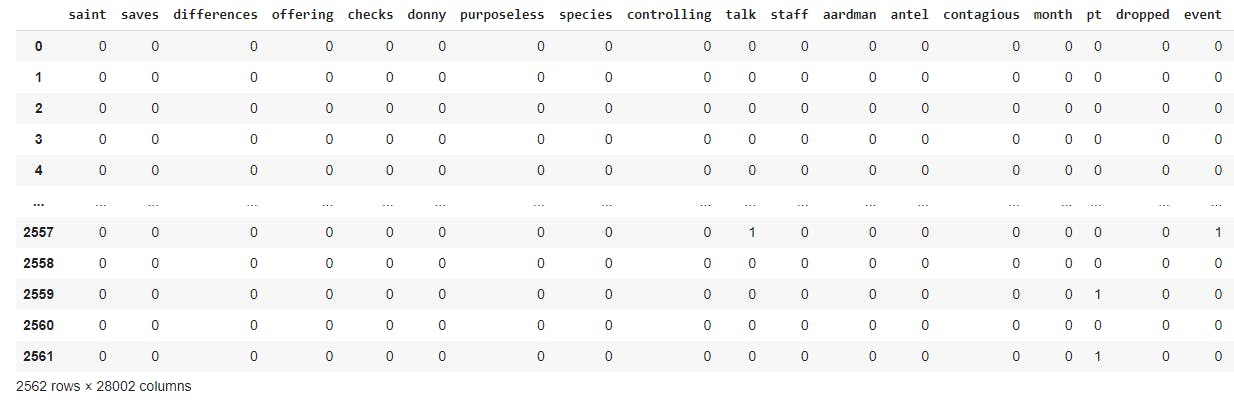

data_feat = pd.DataFrame(np.zeros(shape=(data.shape[0],len(corpus)))) #2

data_feat.columns = corpus

data_feat = data_feat.apply(lambda x: data.review.str.contains(x.name), axis=0) #3

data_feat = data_feat.replace({True:1,False:0}).iloc[:,1:] #4

Code-explanation

#1 - Get the unique list of all words(Tokens)

#2 - Create a blank DataFrame of size (rows,corpus length)

#3 -Map each col to True/False if it is available in Corpus

Replace True/False with 1/0

Result

We have the OneHotEncoded dataset ready to train a model. We need few more steps e.g. stop_words removal to make it optimum for any model but those steps are not in the scope of this post.

Conclusion

This was all for this post. With these tools in your hand, you can make your text related code robust and simple. Try to implement all the functions available in Pandas doc.

The alternate approach to achieve the same results without the .str attribute is using lambda and map i.e. dataframe.text_col.map(lambda x : x.lower())