Introduction

ChatGPT is a product that truly requires no introduction. Since its launch, its impact has been nothing short of remarkable (see the image below). As someone who closely follows the AI research industry, I never imagined a scenario where a new product would not only challenge Google but also leave it seemingly helpless.

It's worth noting that Google has been responsible for many of the key research developments of the past decade, including the Transformer architecture, which have propelled deep learning to the heights it enjoys today.

Jargon - OpenAI, ChatGPT, text-davinci-003

OpenAI is the AI lab that built ChatGPT and drives the underlying research and models.

ChatGPT is a conversational model created by OpenAI, built upon the foundation of the GPT architecture, which stands for "Generative Pre-trained Transformer." As a language model, its primary function is to predict the next token (roughly, the next word) based on the input so far. Significantly, ChatGPT has been trained using RLHF (Reinforcement Learning from Human Feedback), which elevates its capabilities beyond those of a traditional language model.

text-davinci-003 - The name of one of the text models in the OpenAI model suite. Alongside Davinci, OpenAI offers a variety of other text models as well as image models. To explore the most up-to-date list of available models, you can refer to the OpenAI website. [ Open AI models ]

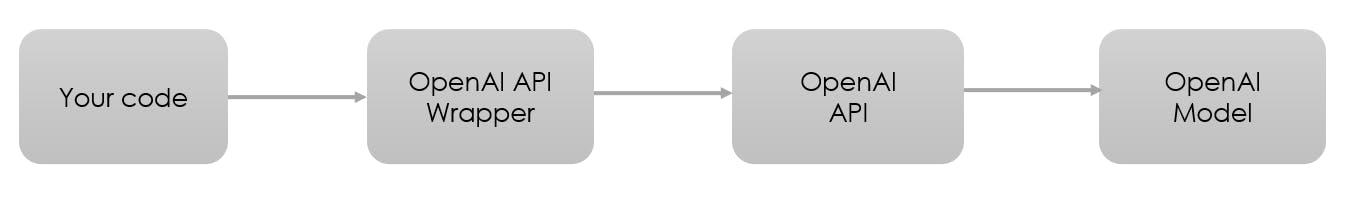

API Interface

Beyond the conversational interface of ChatGPT, users can also access ChatGPT results through its programming interface. To facilitate this, OpenAI provides Python and Node.js libraries for communication with their models [ Libraries ]. For other programming languages, community-supported libraries are available.

Although the API call blocks may appear similar to those used in other APIs, such as database calls, there are additional technical concepts that must be understood in order to effectively use the ChatGPT API. Let's take a brief look at these concepts:

Token - Tokens are the primary input for a language model. By definition, a token is the smallest unit into which a corpus is divided. You may think of it as a word, but sometimes it is a sub-word, e.g. "Newer" can be split into two tokens, "New" and "er". How words are divided depends entirely on the model and its training setup.

OpenAI imposes a limit on the number of tokens passed to the model. The combined count of tokens passed in the API call and tokens returned in the response must stay within the model's token limit. You may wonder how to determine the number of tokens being passed. There are two rough approaches: first, simply count the words and stay well below the threshold; second, follow the rule of thumb from OpenAI's documentation, which states that 1 token is approximately equal to 4 characters or 0.75 words in English text.

Prompts - A language model prompt is a text-based input given to a language model, such as GPT-3 or GPT-4. The prompt serves as an initial context or a request for the model to generate a relevant response or perform a specific task. The model uses its pre-trained knowledge and understanding of language patterns to process the prompt and generate contextually appropriate output. For example:

*Prompt example: "Classify the sentiment of this movie review: 'I went to see the movie last night, and it was an incredible experience. The acting was superb, the visuals were stunning, and the story was engaging from start to finish. I highly recommend this movie to everyone.'"*

Jump-start example with Python

Let's build on the knowledge that we have just gained.

    import openai

    # Note: this example uses the legacy (pre-1.0) interface of the openai package.
    # Replace 'your_api_key' with your actual API key
    openai.api_key = "your_api_key"

    # The input sentence to be completed
    input_sentence = "The best way to predict the future is to"

    # Generate a completion using the DaVinci 003 model
    response = openai.Completion.create(
        engine="text-davinci-003",
        prompt=input_sentence,
        max_tokens=10,
    )

    # Extract the completion from the response. strip() removes the leading
    # space, so add a single space back when joining it to the input.
    completed_sentence = input_sentence + " " + response.choices[0].text.strip()

    print(completed_sentence)

Code explanation

openai.api_key is your secret API key; the next section explains how to get one.

engine is the name of the model to use, i.e. its variant and version.

max_tokens is the maximum number of tokens to generate in the response.

response is a JSON object with the following structure.

    {
        'id': 'completion-XXXXXXXXXXXXX',
        'object': 'completion',
        'created': 1677649420,
        'model': 'text-davinci-003',
        'usage': {
            'prompt_tokens': 6,
            'completion_tokens': 10,
            'total_tokens': 16
        },
        'choices': [
            {
                'text': ' create it ourselves.',
                'index': 0,
                'logprobs': None,
                'finish_reason': 'stop'
            }
        ]
    }

So, to read the completion, take the "choices" key of the response, which is a list. Take the first element of that list, i.e. [0], which is again a JSON object. Then read the "text" key of that object.
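To make the lookup concrete, here is a small sketch that walks the same path on a plain Python dict shaped like the sample response above. The helper name `first_completion_text` is invented for illustration; the live library returns its own response object, so treat this as a picture of the structure rather than of the library's API.

```python
# Sample response shaped like the structure shown above, as a plain dict.
response = {
    "id": "completion-XXXXXXXXXXXXX",
    "object": "completion",
    "created": 1677649420,
    "model": "text-davinci-003",
    "usage": {"prompt_tokens": 6, "completion_tokens": 10, "total_tokens": 16},
    "choices": [
        {
            "text": " create it ourselves.",
            "index": 0,
            "logprobs": None,
            "finish_reason": "stop",
        }
    ],
}


def first_completion_text(resp: dict) -> str:
    """Return the text of the first choice, with surrounding whitespace removed."""
    choices = resp.get("choices", [])
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["text"].strip()


print(first_completion_text(response))
# The usage block reports how many tokens the call consumed.
print(response["usage"]["total_tokens"])
```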

How to get openai.api_key

To work with OpenAI, we need an API key and some credit in the account. OpenAI provides $18 of free credit to start with, which is sufficient for all learning needs. Below is a guiding screen from my account.

Go to your "Personal" profile and click on "View API keys".

Click on "Create new secret key" under "API keys".
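Once you have a key, it is safer to keep it out of your source code. The sketch below reads it from an environment variable; `load_api_key` is a hypothetical helper written for this post, and `OPENAI_API_KEY` is the conventional variable name for the key.

```python
import os


def load_api_key(env=os.environ, var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, or raise if it is missing."""
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first")
    return key


# Example with an explicit mapping so the snippet runs without a real key.
print(load_api_key({"OPENAI_API_KEY": "sk-example"}))
```

In the jump-start example, you could then write `openai.api_key = load_api_key()` instead of pasting the key into the script.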

That is all for the quick onboarding guide. Now let's jump to the use cases.

Use case - I

Making a Q&A bot from your own content

Creating a bot that can answer questions based on a local document and engage in conversation has long been a desired use case. In the past, this required fine-tuning a language model to adapt to the local corpus.

However, with the OpenAI API, users can simply pass the content and set the prompt to answer questions based on that content. Though this may sound oversimplified, it's essentially accurate, with the exception of an additional step due to OpenAI's token length limit, which prevents passing the full corpus.
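The post does not spell out the additional step here, so the following is only one possible sketch of it: split the content into chunks that fit the token budget, pick the chunk most related to the question, and put that chunk into the prompt. The keyword-overlap scoring is a deliberately naive stand-in chosen for illustration, and all function names and the prompt wording are assumptions.

```python
import re
from typing import List, Set


def split_into_chunks(text: str, max_words: int = 200) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def word_set(text: str) -> Set[str]:
    """Return the distinct lowercase alphanumeric words in text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_overlap(question: str, chunk: str) -> int:
    """Count distinct words shared by the question and the chunk (naive relevance score)."""
    return len(word_set(question) & word_set(chunk))


def build_qa_prompt(question: str, document: str, max_words: int = 200) -> str:
    """Pick the chunk that best matches the question and wrap it in a prompt."""
    chunks = split_into_chunks(document, max_words)
    if not chunks:
        raise ValueError("document is empty")
    best_chunk = max(chunks, key=lambda c: keyword_overlap(question, c))
    return (
        "Answer the question using only the content below.\n\n"
        f"Content:\n{best_chunk}\n\n"
        f"Question: {question}\nAnswer:"
    )


document = (
    "Our office opens at nine in the morning on weekdays. "
    "The cafeteria serves lunch from noon until two in the afternoon. "
    "Parking is free for visitors on the ground floor."
)
# A tiny max_words keeps the example readable; real chunks would be much larger.
print(build_qa_prompt("When does the cafeteria serve lunch?", document, max_words=10))
```

The resulting string is what you would pass as `prompt` in a completion call like the jump-start example.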

Variations on this use case

Variations of this use case arise depending on the nature of the document, but ultimately, they share the same core concept: providing a new tool to answer questions. Some examples of these variations include:

Answering questions from a PDF document

Answering questions from website content

Answering questions from audio or video (with some extra effort)

Use case - II

Summarizing a YouTube video

Summarizing videos is another of the most cited OpenAI use cases on social media. It has gained popularity because it lets users get the gist of a video without watching the whole thing. It's particularly helpful when you're short on time or need to collect insights from multiple sources. It can be achieved by transcribing the video with a speech-to-text model, e.g. Whisper, and then passing the transcript to a language model to obtain the summary.

Here are the steps to summarize a YouTube video:

Start by extracting the audio from the video.

Use a speech-to-text service API to transcribe the audio into text.

Feed the transcribed text to OpenAI's API, and request a summary of the content.
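The steps above can be sketched as a small pipeline. To keep the example runnable offline, the transcription and completion steps are passed in as functions. `fake_transcribe` and `fake_complete` are stand-ins invented here, to be replaced with real calls to a speech-to-text service and a language model. The audio file is assumed to have been extracted already, and the prompt wording is also an assumption.

```python
from typing import Callable


def build_summary_prompt(transcript: str, max_sentences: int = 3) -> str:
    """Wrap a transcript in an instruction asking for a short summary."""
    return (
        f"Summarize the following video transcript in at most {max_sentences} sentences.\n\n"
        f"Transcript:\n{transcript.strip()}\n\nSummary:"
    )


def summarize_video(
    audio_path: str,
    transcribe: Callable[[str], str],
    complete: Callable[[str], str],
) -> str:
    """Transcribe the extracted audio, then ask a language model for a summary."""
    transcript = transcribe(audio_path)
    if not transcript.strip():
        raise ValueError("transcription returned no text")
    return complete(build_summary_prompt(transcript)).strip()


# Stand-in functions so the sketch runs offline; swap in real API calls.
def fake_transcribe(path: str) -> str:
    return "Today we cover tokens, prompts, and two use cases for the OpenAI API."


def fake_complete(prompt: str) -> str:
    return " The video introduces tokens, prompts, and two OpenAI API use cases."


# "talk.mp3" is a hypothetical path to the audio extracted in the first step.
print(summarize_video("talk.mp3", fake_transcribe, fake_complete))
```

Long transcripts can exceed the token limit, so in practice they may need to be split and summarized in parts.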

Summary

That is all for this post. We learnt about ChatGPT, an AI model developed by OpenAI that has made significant strides in the AI research industry, even challenging well-established giants such as Google. OpenAI offers various models, including text-davinci-003, and provides APIs for communication with these models.

It is essential to understand the concepts of tokens and prompts when using OpenAI's API. The two popular use cases we covered were creating a Q&A bot from one's own content and summarizing YouTube videos. With OpenAI, it becomes easier to build tailored solutions for various applications, changing the way we interact with AI.

In the next post, we will walk through the actual code for the two use cases.

Off the Cuff

Things to do to dive deeper

Read through the OpenAI documentation [ Official page ]

Create your OpenAI key, replicate the code, and play with different models

Follow AI experts on Twitter

Some of my liked tweets from experts -